How to Evaluate Different Storage Solutions for Post, Where Real-Time Access Is Crucial

The media and entertainment (M&E) industry, and especially the post-production sector, has become an aggressively targeted market for NAS- and SAN-based solutions, with more and more vendors making inroads over the past three years. With solutions specifically designed for customers requiring real-time access and high throughput, new players are trying to conquer this special market segment. Some solution providers, including Promise Technology, Infortrend and Bright Technologies (where I am CTO), developed entirely new dedicated products to tackle the growing market for shared media storage solutions. Others acquired the required expertise and reputation in the field, e.g. NetApp acquiring the LSI Engenio division, DotHill teaming up with Quantum and Autodesk, and so on.

In general this is, of course, a good thing, as post-production facilities can leverage the fierce competition and benefit from a wider range of offerings. In the price war that comes as vendors fight for every customer, storage solutions become affordable on a wider range of budgets. After deciding whether a NAS or a SAN is the better fit for a particular set of needs (a process that requires weighing many facts, figures and workflow characteristics, too much to cover in this article), the customer faces the challenge of picking the right storage vendor. Companies can choose between very affordable solutions based on SATA/nearline SAS 7200 RPM drives and a single controller, mid-range RAIDs with true SAS drives at 10K or 15K RPM and active/active dual RAID controllers, and all the way up to enterprise solutions equipped with SSDs, hybrid SSDs or flash memory, or controllers with an I/O booster. A lot of vendors accommodate the common request to start small with the potential to grow. DDN, for example, offers fairly unique, yet rather pricey, solutions that can grow almost without limit thanks to a controller design in which 1200 drives can be controlled by only two controller nodes. Other companies, including DotHill, Nexsan and SGI (to name only a few), manage future growth the more traditional (and less expensive) way, with daisy-chained enclosure expansions or by providing the option to easily add additional RAIDs later on.

With all these vendors bringing interesting solutions to the market, each following a slightly different approach, it can be fairly hard to determine which storage solution is the right one for your particular workflow. To make matters worse, at least in my personal experience over the past seven years, customers are often handed vendor quotes that compare apples and oranges, making it almost impossible to pin down which solution will really meet your expectations and, more importantly, your requirements. Depending on the scale, a mature and professional Fibre Channel-based SAN setup can be a serious investment, so you want to be sure that the system will show a good ROI over time, running smoothly for several years instead of just a couple of months.

With that in mind, NAB provides the perfect opportunity to shop for SAN storage solutions. If you arrive with a proper set of smart questions, it will be a lot easier to find the right fit. The most important questions that you have to ask yourself are these:

- How many clients will be connected to the storage pool?

- How many streams (not bandwidth!) will each and every client need, right now and in the future?

- Most importantly, how many concurrent streams have to be provided to keep your workflow running like clockwork?

The demands placed on your facility could increase in the future, so you don’t want to invest in a static solution that you might outgrow sooner than you expect. On the other hand, you don’t want to over-spec (and over-pay) for a solution that you might never use to its full extent.

Never Mix Up Throughput or IOPS with Stream Performance

The most important mistake to avoid when evaluating a SAN (or NAS) storage solution is also the most common one: confusing throughput or IOPS with stream performance. Throughput or high IOPS will not provide you with the performance you need in a workflow with real-time access requirements.

And this is where it becomes tricky. Unfortunately, not all storage vendors possess the expertise or experience to really understand the difference between the requirements of their standard industrial customers and their customers in the M&E or post-production sector. While industrial customers consider a storage setup with high throughput and IOPS to be high performance, customers in M&E need high (real-time) streaming performance. Bandwidth and IOPS are secondary, if not entirely irrelevant. Post-production applications working with sequence-based raw (single frame) material write to and read from the storage sequentially, as opposed to unstructured IT environments where data is read and written randomly.

A pretty common and recurring situation when it comes to buying storage could look like this. A customer expects to have eight attached clients in total, six of which need real-time read/play in 2K raw, while two clients will require 4K raw access. Most customers do the math this way: 2 clients x 4K (1200MB/s each) = 2400MB/s, plus 6 clients x 2K (300MB/s each) = 1800MB/s; therefore a total throughput of 4200MB/s is required.

Since all storage vendors know what their products can do in regard to IOPS or random throughput, a vendor may tell the customer that they need, for example, two RAIDs, each providing 2500MB/s. Combined, that will provide the requested 4200MB/s, plus 800MB/s of overhead. For a standard IT-based environment, this calculation might be correct, and the SAN would probably be perfectly suitable. But when it comes to sequential and concurrent data access by more than one client at a time, the entire setup becomes a whole different beast. With a setup like this, the post-production facility will realize a couple of weeks after the SAN has been deployed that the performance is far below the eight concurrent streams they actually needed.
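The naive sizing described above can be written down in a few lines. This is only a sketch of the customer's and vendor's arithmetic, using the per-stream figures quoted in this article; the function and variable names are made up for illustration.

```python
# Naive sizing: add up nominal stream rates and compare with the vendor's
# aggregate throughput. Rates are this article's rule-of-thumb figures.
STREAM_RATE_MBPS = {"2K": 300, "4K": 1200}  # MB/s per raw real-time stream


def naive_required_throughput(clients):
    """Sum the nominal stream rate of every client, in MB/s."""
    return sum(STREAM_RATE_MBPS[fmt] * count for fmt, count in clients.items())


clients = {"4K": 2, "2K": 6}                    # the example facility
required = naive_required_throughput(clients)   # 2 * 1200 + 6 * 300
offered = 2 * 2500                              # two RAIDs at 2500 MB/s each
headroom = offered - required

print(f"required {required} MB/s, offered {offered} MB/s, headroom {headroom} MB/s")
```

On paper the numbers add up, which is exactly why this calculation is so tempting. It says nothing about how the disks behave once several clients read at the same time.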

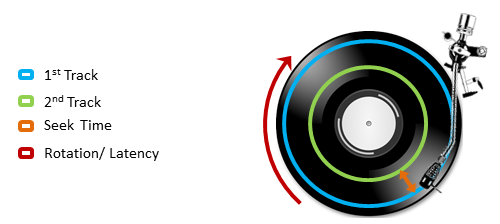

What went wrong? Our example customer XYZ’s requirements have not been accounted for or, even worse, were not understood in full. To picture it, customer XYZ needed a setup that could play two songs from a record at the same time, and vendor ZYX provided a single turntable. Now the customer has to lift the arm of the record player (seeking) and move it to the position of the second song (latency), then lift the arm again (seeking) and return it to the original position (latency) to pick up the first song where it left off. Every time the arm is lifted, there will be no music playing, no matter how fast the arm is moved.

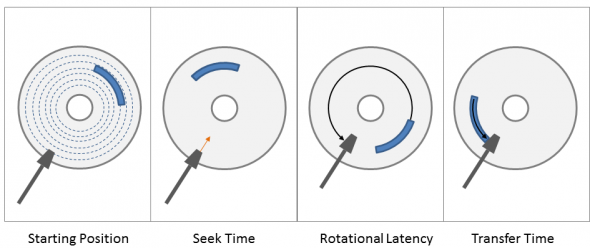

The effect is basically the same with spinning disks in a RAID system. As soon as two or more clients access a shared SAN volume simultaneously, the drive heads seek to either read or write the requested data, so every operation becomes a four-step process: read/write data for client A, send the heads to the position client B requested, read/write data for client B, and then move back to the original position to continue serving client A. Even the fastest spinning disks will quickly show seek and rotational latencies, as the platters can only spin at a maximum of 15,000 RPM (or only 10,000 or even 7,200 RPM for the less expensive ones). It’s a simple physical, hence insurmountable, issue. The problem becomes visible because, every time the drive heads are in motion, data transfer to and from the disks comes to a halt with literally no I/O. No music is playing. The result is stuttering playback, dropped frames, etc.

Apply these physics to a RAID system that delivers 1000MB/s but is now serving two clients, each requesting a 2K stream (300MB/s). Basically, each client gets only 25% of the bandwidth (250MB/s, already short of the 300MB/s it needs), while the remaining 50% of the bandwidth is lost to head seeks and rotational latencies. That’s a devastating loss of performance.

In our sample case with eight clients attached to the shared storage, the number of drive head movements increases to 16 (eight to serve the clients, one each, and eight more with no I/O at all). As a result, the originally provided 5000MB/s throughput serves each client with only 312.5MB/s (or 6.25%) of the bandwidth. That’s far below the required 1200MB/s for a single 4K raw stream. And this is the best-case scenario, assuming the underlying file system is entirely clean. If the file system shows even the slightest degree of fragmentation, the latencies will increase. The storage RAID provides exactly what vendor ZYX has promised, but the performance will not follow the math. It is physically impossible.
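The simplified model used in the two examples above (one transfer slot per client, plus one equally long slot per client in which the heads move and no data flows) can be sketched as follows. It is deliberately crude, the function names are illustrative only, and real arrays with caches and read-ahead will behave differently.

```python
def per_client_bandwidth(total_mbps, clients):
    """Bandwidth each client sees under the article's simplified seek model.

    Every client costs one slot of useful transfer plus one slot of head
    movement with no I/O, so the total is divided by twice the client count.
    """
    slots = 2 * clients
    return total_mbps / slots


def share_percent(total_mbps, clients):
    """Per-client share of the nominal throughput, in percent."""
    return 100.0 * per_client_bandwidth(total_mbps, clients) / total_mbps


# Two 2K clients on a 1000 MB/s RAID, then eight clients on 5000 MB/s.
for total, n in [(1000, 2), (5000, 8)]:
    bw = per_client_bandwidth(total, n)
    print(f"{n} clients on {total} MB/s: {bw} MB/s each ({share_percent(total, n)}%)")

# A single 4K raw stream needs 1200 MB/s by the rule of thumb.
print("4K stream sustainable:", per_client_bandwidth(5000, 8) >= 1200)
```

The point of the sketch is the shape of the curve rather than the exact figures: under concurrent sequential access, the per-client bandwidth falls much faster than the client count alone would suggest.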

Be Aware of the Real-World Math

This example is, unfortunately, nothing out of the ordinary. It happens every day. Some RAID vendors understand the challenge, while other vendors don’t. Even worse, only a few storage vendors seem to fully understand how a proper test environment has to be set up to provide meaningful measurement results for post-production workflows. Just adding eight clients to a RAID subsystem and simulating eight concurrent streams is not going to represent a real-world scenario. Even if the RAID may have achieved eight 2K reads from the subsystem, it is important to know whether the RAID testing environment accounted for certain conditions, including settings and stipulations that may alter the test results.

For instance, almost every post-production application (such as Autodesk Smoke or Blackmagic DaVinci Resolve) utilizes internal buffers to prevent dropped frames. A 2K stream at 24fps usually requires 300MB/s, but in order to fill a buffer of eight frames, the application (or the client) will automatically add about 100MB/s to the initial read request, because more bandwidth is required to fill the buffer. It’s the same for a 4K stream that usually requires 1200MB/s but suddenly needs 1600MB/s as the application adds 400MB/s to the read request to fill its eight-frame buffer. This means the RAID has to deliver a lot more headroom than originally assumed, just because of applications that try to prevent dropped frames. But what happens to those clients working with applications that don’t utilize buffers? Will they get a piece of the action? Of course. However, the fewer buffers (and threads) an application utilizes, the more likely the client will show dropped frames and stuttering playback, as applications with a higher number of threads and buffers will take more of the storage performance.
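The buffer figures above follow from the frame size. As a rough sketch, assuming the application fills its whole buffer within the first second on top of the steady stream (an assumption made here to reproduce the article's numbers, not a documented behavior of any particular application):

```python
def frame_size_mb(stream_mbps, fps=24):
    """Size of one raw frame in MB, derived from the stream rate."""
    return stream_mbps / fps


def buffered_demand_mbps(stream_mbps, fps=24, buffer_frames=8):
    """Initial demand in MB/s: the steady stream plus one full buffer fill.

    Assumes the buffer is filled within one second alongside normal playback.
    """
    buffer_mb = frame_size_mb(stream_mbps, fps) * buffer_frames
    return stream_mbps + buffer_mb


for label, rate in [("2K", 300), ("4K", 1200)]:
    print(f"{label}: {frame_size_mb(rate)} MB per frame, "
          f"{buffered_demand_mbps(rate)} MB/s while the buffer fills")
```

With the defaults this gives 12.5MB per 2K frame and 50MB per 4K frame, so an eight-frame buffer accounts for the extra 100MB/s and 400MB/s respectively.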

No storage manufacturer has direct control over either the number of threads or the number of buffers the various applications use. However, it is possible to create workarounds that simulate real-life scenarios, allowing the proper testing of storage performance. Try to find out as many details as possible about the particular testing procedures of the vendor products you are interested in.

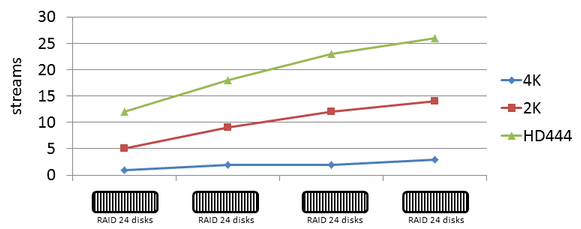

Another interesting and important physical property of spinning disks is the fact that performance doesn’t increase in a linear fashion. When designing your SAN and evaluating storage requirements, remember that doubling the number of RAIDs or disks will not result in twice the performance. That means 48 disks will not give you twice the performance of 24 disks, but maybe only 75% more.

If you plan to explore your SAN options at NAB, add these questions to your must-ask list when talking to storage vendors:

- Does the performance table of the vendor’s RAID subsystem clearly state that it can do X streams of 2K/4K (or whatever resolution is important to you) at specific frame rates?

- How will the stream count change if two or more clients are writing simultaneously?

- What happens if it is necessary to run X streams of 2K/30fps or even 50fps?

- Has performance loss due to file-system degradation been accounted for?

- What are the upgrade options to gain more performance?

As a rule of thumb, every client requiring a 2K raw real-time stream usually needs at least 300MB/s, while a 4K raw real-time streaming client will require 1200MB/s. On top of that, each client needs headroom to cover latencies caused by the host bus adapter, Fibre Channel switches and a potentially fragmented file system.
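To get a first feel for the frame-rate question in the list above, the rule-of-thumb figures can be scaled by frame rate. This sketch assumes the 300MB/s and 1200MB/s figures refer to 24fps and that raw frames keep the same size at higher frame rates, so bandwidth grows linearly with fps; the names are illustrative, and the results exclude the headroom just mentioned.

```python
BASE_FPS = 24                                # assumed basis of the rule of thumb
BASE_RATE_MBPS = {"2K": 300, "4K": 1200}     # MB/s per raw stream at BASE_FPS


def stream_rate_mbps(fmt, fps):
    """Nominal MB/s for one raw stream of the given format at the given fps."""
    return BASE_RATE_MBPS[fmt] * fps / BASE_FPS


for fmt in ("2K", "4K"):
    for fps in (24, 30, 50):
        print(f"{fmt} at {fps}fps: {stream_rate_mbps(fmt, fps)} MB/s")
```

Under these assumptions a 2K stream goes from 300MB/s at 24fps to 375MB/s at 30fps and 625MB/s at 50fps, which is worth knowing before asking a vendor how many such streams a system can sustain.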

Some vendors may tell you that certain firmware settings on their system can be tuned, for example to alter the I/O pattern algorithm or block size, disable particular housekeeping calls or disable cache mirroring. Those settings will help to improve the performance for sequential access, for sure, but not over the long run. I’ve heard countless local admins complain about losing performance for no obvious reason: no additional clients have been added to the setup, nothing has changed regarding their workflow or workload, and yet the system still degrades in speed and overall performance.

Aside from a variety of hardware parts that can break over time (an SFP burns out, or certain blocks on a disk go bad, forcing the disk head to re-read those blocks over and over, slowing the entire RAID), a very common factor in “mysterious” performance loss is the underlying file system.

Each file on a file system will fragment over time (unless the file is only written once and will never be deleted), meaning single files will literally be split into hundreds of segments, and the disk head has to seek and fly over the whole disk to grab all the pieces and deliver them to the requesting client. When working with 24 frames per second, every client has only about 41.7ms to find, read, process, and deliver one frame to the display. Remember our turntable from above? All parts of the song would be scattered across the record, and it would take a while to find the matching pieces to play the song.
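The per-frame time budget is simply one second divided by the frame rate, and it shows how little room there is for extra seeks. In the sketch below, the 8ms cost per seek is a hypothetical round number chosen for illustration, not a measurement from any drive, and the function names are made up.

```python
def frame_budget_ms(fps):
    """Time available to find, read, process and deliver one frame, in ms."""
    return 1000.0 / fps


def max_seeks_per_frame(fps, seek_ms):
    """Whole seeks that fit into one frame budget if nothing else took time."""
    return int(frame_budget_ms(fps) // seek_ms)


ASSUMED_SEEK_MS = 8.0  # hypothetical cost of one seek plus rotational latency

for fps in (24, 30, 50):
    print(f"{fps}fps: {round(frame_budget_ms(fps), 2)} ms per frame, "
          f"at most {max_seeks_per_frame(fps, ASSUMED_SEEK_MS)} seeks")
```

Even with this generous simplification (no time at all spent transferring or processing data), only a handful of seeks fit into a single frame at 24fps, which is why a file scattered across hundreds of segments cannot play back smoothly.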

Of course, there is a lot more to it. But hopefully this will provide an informative basis to make your NAB 2014 a successful endeavor where SAN storage solutions are concerned.